
We can start your penetration test immediately after receiving the scope.

With affordable options such as our AI or hybrid model, you can reduce costs.

We use senior-level ethical hackers who look for real exploits and vulnerabilities.

We have worked with clients in almost every industry, from SMBs to large enterprises.

Protect sensitive patient data and connected medical systems from cyber threats. We help hospitals, healthcare platforms, and medical device manufacturers meet compliance and ensure patient safety through advanced penetration testing.

Safeguard your financial systems, APIs, and customer data. Our pentesting experts simulate real-world attacks on banking apps, fintech platforms, and payment infrastructure to uncover vulnerabilities before criminals do.

From SaaS platforms to cloud-native infrastructure, we identify weaknesses across your apps, APIs, source code, and environments, helping you stay secure, compliant, and trusted at scale.

We secure critical systems, networks, and infrastructure against sophisticated adversaries. Our assessments strengthen national, state, and municipal cyber resilience with mission-grade precision.

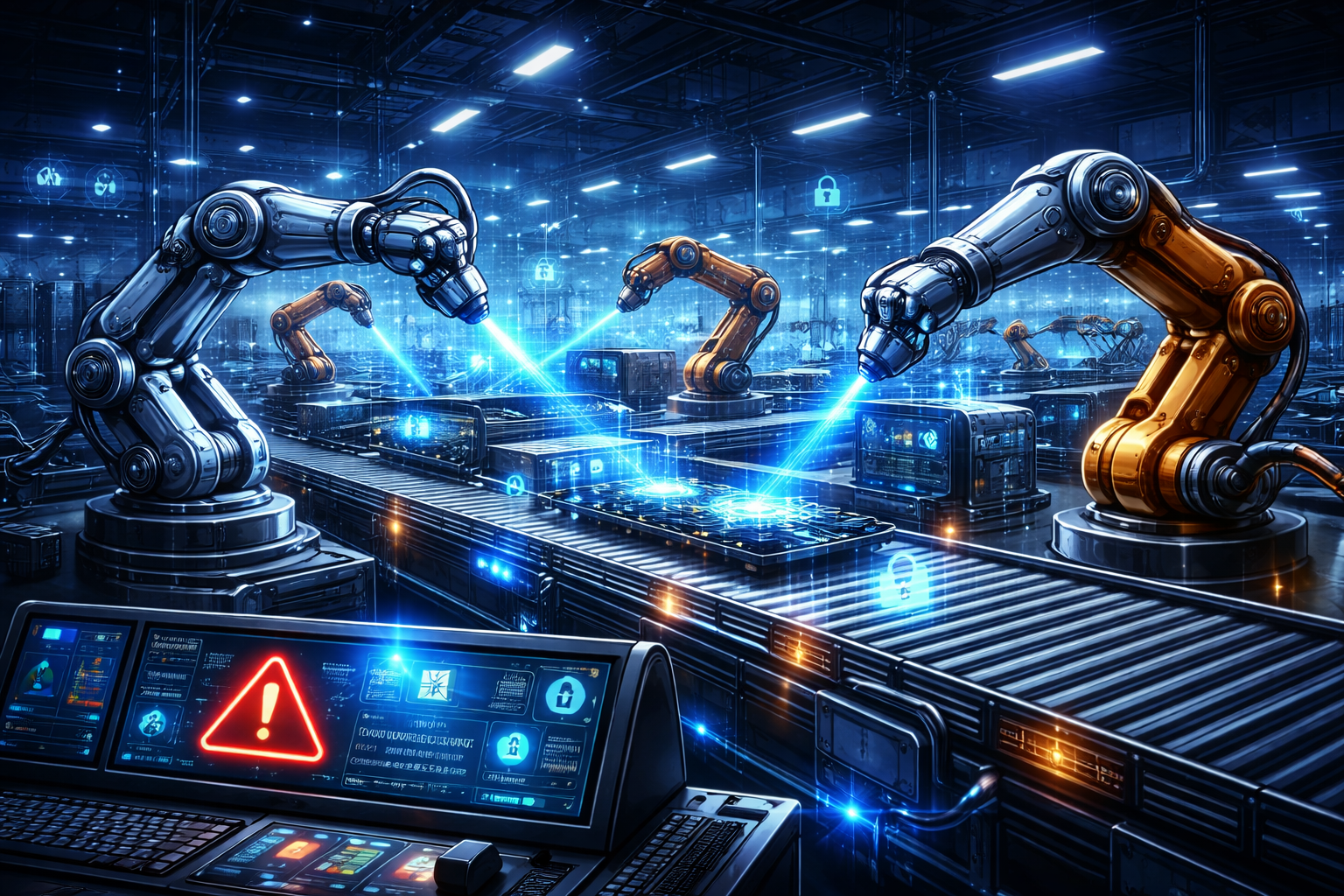

Protect your production lines, industrial control systems, IoT devices, and power plants from disruption. We test the full stack, from OT networks to connected machinery, to keep factories and power systems running securely.

Universities, schools, and e-learning platforms handle vast amounts of data. We help you prevent breaches, protect student information, and secure online learning environments through focused penetration testing.

We help our partners expand their offerings and deliver greater security value to their clients.

We partner with Managed Service Providers to integrate our penetration testing services into their security offerings, providing clients with comprehensive testing and added value.

We partner with compliance platforms and auditing firms to help their clients meet penetration testing requirements for SOC 2, PCI, HIPAA, and ISO certifications.

We partner with vCISOs, security consultants, security awareness firms, and other resellers to enhance clients’ overall security posture.

A simple flow from scoping to testing, reporting, remediation verification, and closeout.

Define what needs testing, desired outcomes, start date, in-scope assets, access needs, and any compliance requirements.

Send the quote for review, confirm scope and dates, then get signature/approval to proceed.

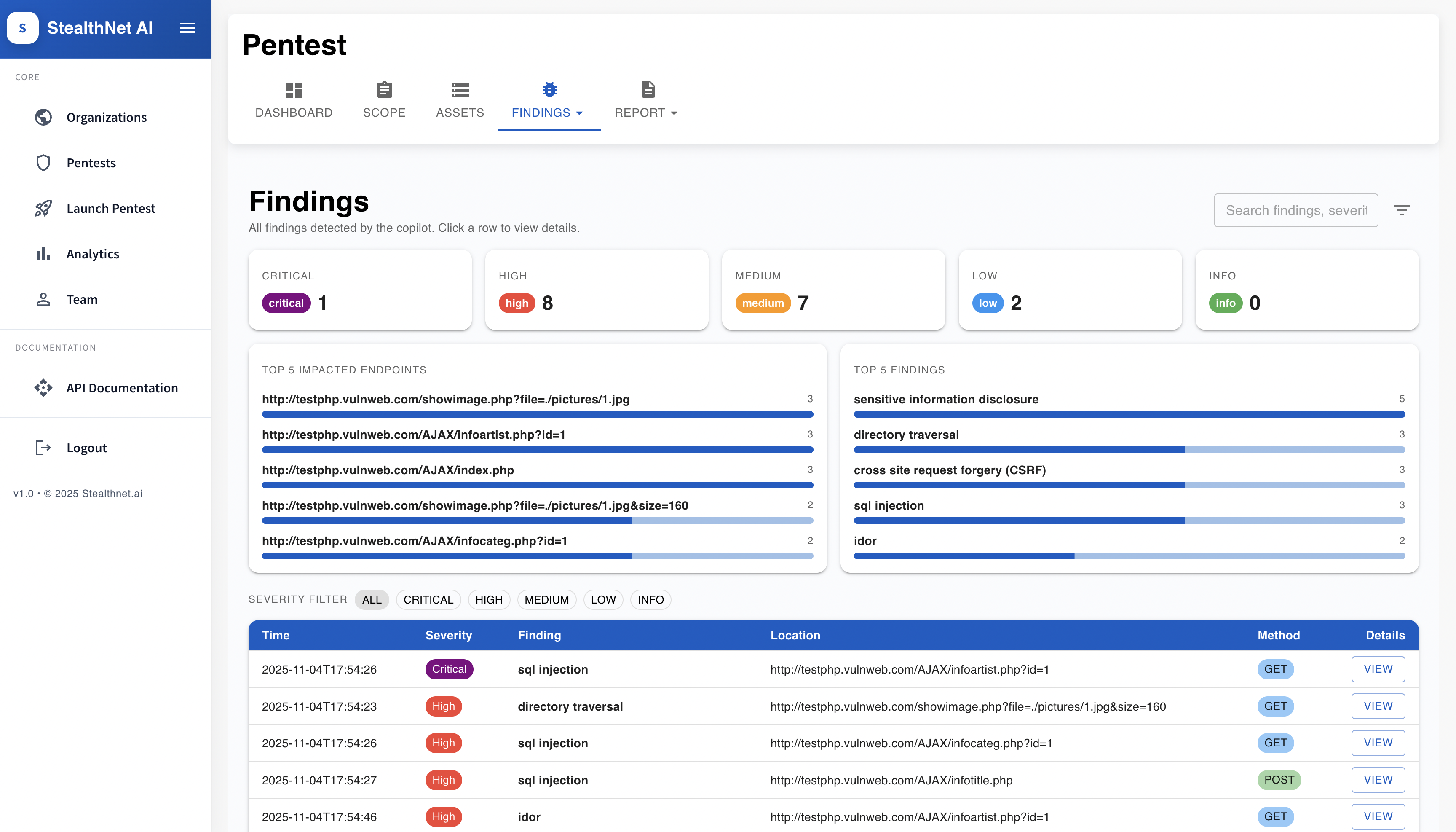

Assign the team, send a kickoff call/email, then begin testing in-scope assets to find vulnerabilities and validate exploitability.

Document findings with evidence and remediation guidance, then share the final report.

After you fix the issues, we re-test to confirm the vulnerabilities are resolved and no longer exploitable.

Deliver the final report and document any remediation results, then wrap up with a quick closeout, feedback, and next steps.

Pick between an AI-only, hybrid (AI + human), or manual (human-only) pentest.
